NYU Shanghai’s auditorium was buzzing with music last month as our Interactive Media Arts (IMA) and Computer Science faculty brought together music technology scholars and artists from around the world to explore digital methods for enhancing musical expression and performance. The international New Interfaces for Musical Expression (NIME) conference, held annually since 2001, took place mostly online this year from June 14 to 18, with an onsite day for China-based scholars on June 17.

NYU Shanghai’s onsite day featured in-person talks, exhibitions, and live performances that were also live-streamed to a global audience, with over 400 participants logging on from the U.S., Europe, and across Asia.

“Organizing NIME has been a wonderful adventure,” says Assistant Professor of Computer Science Gus Xia, who co-hosted the conference with Visiting Arts Professor of IMA Margaret Minsky.

“We had a fantastic crew of volunteers, without which NIME 2021 would be impossible. We learned how to make decisions when things were uncertain, kept calm when the environment was chaotic, and pushed forward with a clear vision.”

Minsky and Xia served as moderators for the live-streamed interactions and Q&A sessions with three keynote speakers: Roger Dannenberg, Emeritus Professor of Computer Science, Art & Music at Carnegie Mellon University; Yann LeCun, Vice President & Chief AI Scientist at Facebook; and Dr. AnnMarie Thomas, director of the Playful Learning Lab and professor in the School of Engineering, the Opus College of Business, and the Center for Engineering Education at the University of St. Thomas.

Dr. AnnMarie Thomas, Yann LeCun, and Roger Dannenberg were the three keynote speakers of this year’s NIME conference.

Over the course of the day, presenters touched on themes of accessibility, AI-human interaction, the artform of sound technology, and how developing music technology can make music education widely accessible—from apps and musical interfaces that give new experiences of traditional Chinese instruments, to course curriculum and invented instruments designed for more engaging, playful, and creative classroom interactions.

Conference organizers deployed a wide array of online tools to give virtual conference-goers a robust experience. Online participants used the messaging platform Slack as the main conference hub for exploring workshops and paper presentations, and attended keynotes and plenary sessions via Zoom. Music track events were hosted on YouTube and Bilibili, and participants used gather.town, a game-inspired 2D spatial audio meeting place, to video chat with each other using avatars.

The following are highlights from several panel discussions.

Professor Zhou Haihong, vice president of the Central Conservatory of Music and a music psychologist, designed a lightweight, foldable double-keyboard instrument called Walkband—a music interface prototype that allows for many creative possibilities in the classroom such as group teaching and creating ensembles. It can be worn and played while walking or unfolded into two separate keyboards from which two players can perform a duet. A pitch bend wheel imitates the gliding sound of Chinese traditional instruments, and each key can take on one of 96 percussion instruments. Zhou is currently developing an elementary-school curriculum and regularly runs experimental classes to make music education more accessible in China.

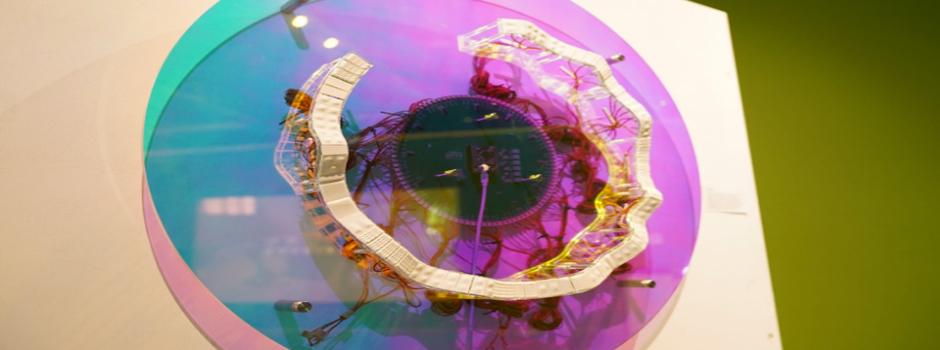

Wang Yun, a Ph.D. candidate from Tsinghua University, walked through the hardware and software design process of VORTEX, a tangible ceramic musical interface that produces sounds of the Chinese guzheng when touched by human hands. To play, users interact with both hands and move their fingers in opposite directions to modulate two audio tracks; the faster the movements, the faster the tempo. Each touch on a different module creates a new layer of tactile information and adds a new sensory track to the music, enriching the user’s experience.

"Smoothness, granularity, [and] warmth are...tactile sensations...often associated with music experiences,” said Wang. “However, since audiences without professional music training activate limited sensory channels while listening to music, they have difficulties understanding the relationship between the textures of sound and the techniques of the musician. This could be a potential research direction for future immersive music performance and experience.”

Three sixteen-year-old students used their high school sound lab club, established by Ethan Shan, a music coordinator at Yungu School (founded by Alibaba partners and chaired by Jack Ma), to create “HER” (Has Equality Re-set?), a digitally mixed and edited collection of ‘daily life’ sound bites that they recorded from women of all ages. Shan helped students Gabrielle, Vicky, and Sakura navigate technologies such as AIVA AI music, AWS DeepComposer, and VCV to explore creative sonic effects. The result was an empowering walk through the various stages of a woman’s life, voicing thoughts on societal pressure and gender inequality.

"What surprised me is our students not only focused on the application and installation of technology, but they also explored how they could demonstrate their ideology through it,” said Shan.

Bai Xiaomo

“In terms of the form of works, sound installation is very different from computer music and electronic music—the former is spatial art, the latter is stage art,” said new media artist, sound engineer, and deputy dean of the Experimental Art School of Sichuan Conservatory of Music, Professor Bai Xiaomo. Bai observed global spatial artworks since the 20th century and created his own installations while continuing to explore the connection between contemporary music and electronic music. “This is the problem for the sound installation creators: how do we make a complete, unified impression on the audience, from different time windows?”

Yang Xingxing and Wang Hongrui

A simple clap or melody is now all that’s needed to create a complete AI-generated song. Yang Xingxing, a programmer and musician from Stanford, and Wang Hongrui, an audio algorithm engineer from Fudan University, demonstrated “AutoBand,” a real-time interactive program that uses AI to generate rhythms, melodies, accompaniments, and motions. Their program was inspired by a combination of open-source projects such as GrooVAE, MusicRNN, and MusicVAE, and shows how machine learning can power human-AI creative collaboration.


Xu Zhixin

The possibilities for expanding computer music seem endless, and Xu Zhixin, a composer, sound artist, and computer music researcher who teaches at Shanghai Jiao Tong University, turned the spotlight to the possibilities of running RTcmix, a music programming language, on Google Cloud Run. His approach, based on cloud technologies, proposes a smoother alternative to the many “cumbersome” music programming languages used today, especially in settings such as game design.

Michael Sobolak

Michael Sobolak, who currently teaches instrumental music, electronic music, and a DIY music makerspace course at Whittle School and Studios in Shenzhen, China, envisions a future where musical experiences are more accessible to students of all ages. Inspired by the “Maker Movement,” he’s designing a curriculum to foster creativity, expression, and performance among students who he hopes will dream, design, build and perform on instruments of their own invention.

"The use of music technology in education can achieve the purpose of improving students' artistic and scientific skills, changing the music learning scenario in the form of technology and allowing creativity to be fully reflected,” said Yi Qin, associate professor of Shanghai Conservatory of Music. Yi showcased a set of interactive instrument prototypes that demonstrate sensing technologies such as color recognition, distance/sound/light sensing, and a graphical programming interface suitable for learning and operating. Using this model, students can easily create their own intelligent musical instruments, which means a more efficient, easy and fun means of learning music.

NIME 2021 was sponsored by Ableton, Populele, Bela, Tinkamo, and NYU Shanghai.
